Why Automate High-Level Software Testing?

On our Behalf

How do you write an article about test automation, and our passion for it, in times of ChatGPT? Does such an article still make sense and, if so, in what form?

After some thought, we decided to write the article with the help of ChatGPT, but to write it in two parts:

- Part 1: Our personal story with test automation.

- Part 2: General considerations about test automation that turned out to be relevant to us. We present them as checklists to keep them as short as possible.

In the first part, we share personal experiences and thoughts that, as far as we can tell, are not found in this form in ChatGPT.

The second part contains more general and exhaustive information.

Part 1: Our Personal Story

At wega we have a lot of activities around software validation in order to get formal proof of the proper functioning of applications used in regulated pharmaceutical environments.

One of these activities is manually executing test scripts in the system to be validated. These scripts are pages of precisely defined instructions that have to be followed exactly, step by step.

When some of us did this for the first time, we had mixed feelings and thoughts:

- Whoa, this is really taking all my concentration.

- Oh no, I just skipped step 9!

- I'm bored. How can that be? It's challenging, needs full concentration, and it's exciting to discover this application after all. But still, this task is really tiring. I feel like a robot...

And that was the magic word: “Robot”! Let's automate!

Thinking about what can and cannot be automated, and where machines are better than humans, revealed a new and fascinating way of testing to us. We also began to understand the difference between testing and checking, as described, for example, on tutorialspoint.

Testing, in contrast to checking, involves exploring the system to evaluate whether it behaves as expected: whether users feel comfortable with it and are supported in their tasks, whether potential human errors in handling the system are acceptable, whether the quality of the system is as expected, and whether the look and feel is appealing. Ultimately, it provides holistic feedback on the system under test. Testing is a creative and exploratory process that requires human judgment, experience, and skill. This cannot be automated.

On the other hand, checking is verifying that a system or component meets a set of predefined requirements or specifications; in our case, high level requirements. Scripts are used to compare the actual results of a test with the expected results to determine whether the system under test is performing correctly. Checking is a rather structured and procedural process that can be executed using a test automation tool.

But even though a precise step-by-step description in a test script can be automated according to this definition – does it really make sense?

As so often, the answer is: it depends. Specifically, it depends on the goals you want to achieve with test automation.

So far, we've experienced three different goals that could be achieved with test automation:

- Reducing testing effort and costs while increasing reliability when you have a use case where the same test script needs to be executed repeatedly, for example when performing regression tests.

- Reducing test execution time. Say a new software feature has just been released and needs to be tested quickly: having automated test scripts ready to run greatly reduces the test execution time compared to manual testing.

- Increasing reliability and user satisfaction by implementing system monitoring. Digitalization in the life sciences is creating ecosystems of applications that need to communicate with each other in more or less real time. When applications are sold as SaaS, it becomes more difficult to manage releases and their impact on the entire ecosystem. Add IT activities such as patching and maintenance that take place outside office hours, and many factors are out of our control, any of which could bring crucial day-to-day business processes to a complete standstill. Here the added value of test automation seems enormous to us. Developing test scripts that cover all the crucial processes takes a greater initial investment, but once they are in place, nothing stops you from scheduling a run of all the tests every weekend, or even every night. You can configure your tool to notify you when a defect is detected, so you can act early: you may be able to solve the issue before users even notice it or, in the worst case, at least warn them that the problem is known and being dealt with, which also avoids a lot of support requests.
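
The monitoring idea in the last point can be sketched in a few lines of Python. This is only an illustration under assumptions: the names `run_checks`, `monitor`, and the two example checks are hypothetical, each check stands in for a real automated test script, and the nightly or weekly scheduling itself is assumed to be handled by whatever scheduler your test tool or operating system provides.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def run_checks(checks: Dict[str, Callable[[], None]]) -> List[CheckResult]:
    """Run every check; a check signals a defect by raising an exception."""
    results = []
    for name, check in checks.items():
        try:
            check()
            results.append(CheckResult(name, True))
        except Exception as exc:  # any error counts as a detected defect
            results.append(CheckResult(name, False, str(exc)))
    return results


def monitor(checks: Dict[str, Callable[[], None]],
            notify: Callable[[str], None]) -> List[CheckResult]:
    """Run all checks and send a single notification if anything failed."""
    results = run_checks(checks)
    failures = [r for r in results if not r.passed]
    if failures:
        lines = [f"{r.name}: {r.detail}" for r in failures]
        notify("Scheduled checks failed:\n" + "\n".join(lines))
    return results


# Hypothetical checks standing in for automated test scripts.
def check_login_page() -> None:
    pass  # would drive the application and raise on a mismatch


def check_order_export() -> None:
    raise AssertionError("export service did not respond")


sent_messages: List[str] = []
results = monitor(
    {"login page": check_login_page, "order export": check_order_export},
    notify=sent_messages.append,  # stand-in for an e-mail or chat message
)
print(sent_messages[0])
```

Passing `notify` in as a parameter keeps the sketch independent of any particular messaging channel; in practice it would be replaced by whatever notification mechanism your tool offers.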

To sum up, we'd like to highlight a few of the experiences we have had:

- Writing automated test scripts already tests the system very precisely. The script has to be defined down to the last detail; you cannot describe a step in a ‘more or less’ manner, as you could in a script for manual execution.

- Errors in an automated test script are detected quickly: because execution is very fast, you can run the script repeatedly to check its quality. In contrast, errors in a test script for manual execution are only found during dry runs, in which a tester clicks through each step and verifies that it is written in a way that is understandable and unambiguous for humans.

- And last but not least: Writing automated tests is fun! Instead of executing test scripts manually, we can now watch the mouse move and click through the application under test all on its own. After spending hours doing this manually, that is a very special satisfaction.

Part 2: Considerations on Test Automation

Introduction

In software development, testing is a critical step in ensuring that the software meets the desired requirements and functions as intended. Manual testing can be time-consuming, error-prone, and inefficient, especially when testing large or complex software systems. Test automation, therefore, has become increasingly important in modern software development.

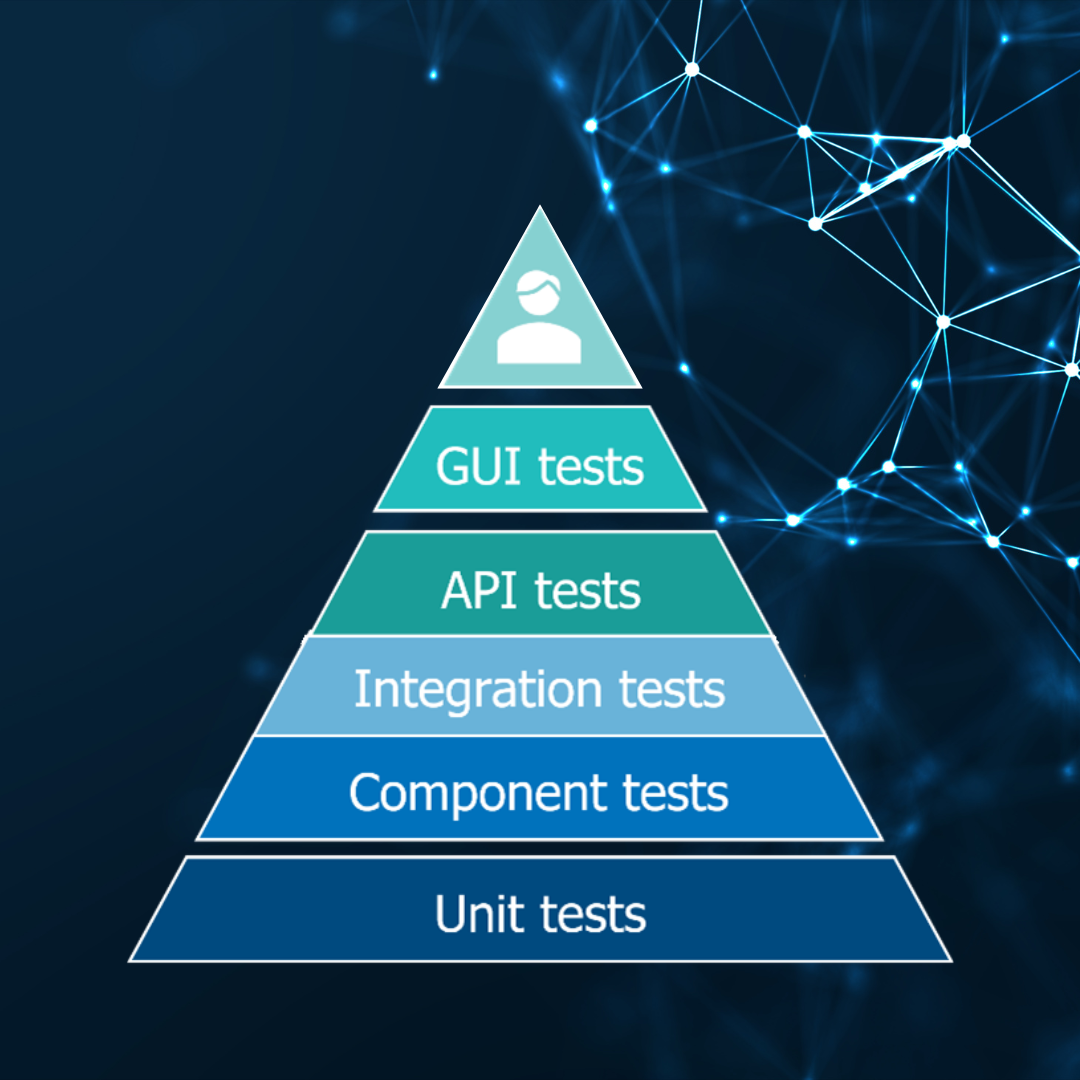

Test automation involves the use of software tools to automatically execute tests, compare actual results to expected results, and generate detailed reports of test results. The aim is to speed up testing, improve test accuracy, and reduce the risk of human error. Test automation can be applied to various types of tests, including unit tests, integration tests, system tests, user interface tests, and regression tests.
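
The three steps named above (execute, compare actual with expected, report) can be illustrated with a minimal Python sketch. It is not how any particular test tool works; `Check`, `execute_checks`, and the function under test `format_batch_id` are hypothetical names introduced only for this example.

```python
from typing import Any, Callable, List, NamedTuple


class Check(NamedTuple):
    name: str
    action: Callable[[], Any]  # produces the actual result
    expected: Any              # the predefined expected result


def execute_checks(checks: List[Check]) -> List[str]:
    """Execute each check, compare actual with expected, collect report lines."""
    report = []
    for check in checks:
        actual = check.action()
        status = "PASS" if actual == check.expected else "FAIL"
        report.append(
            f"{status} {check.name}: expected {check.expected!r}, got {actual!r}"
        )
    return report


# Hypothetical function under test.
def format_batch_id(number: int) -> str:
    return f"B-{number:05d}"


report = execute_checks([
    Check("pads short numbers", lambda: format_batch_id(42), "B-00042"),
    Check("keeps five digits", lambda: format_batch_id(12345), "B-12345"),
])
print("\n".join(report))
```

Real tools add much more (logs, screenshots, timing), but the core loop of running a step and comparing its outcome with a predefined expectation stays the same.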

However, test automation is not a one-size-fits-all solution, and it requires careful planning and execution to be effective. It is important to identify the right tests to automate, ensure that the test automation framework is robust and maintainable, and continuously monitor and update the tests to keep pace with changes in the software. It is also important to balance automated testing with manual testing, as some scenarios may be difficult or impractical to automate.

Benefits and Drawbacks of Automated High-Level Software Testing

Automating high-level software testing can provide several benefits, including:

- Increased Efficiency: Automated testing can run faster and more consistently than manual testing, allowing testing to be done more quickly and efficiently.

- Improved Accuracy: Automated tests execute the same steps identically on every run, which reduces human error in test execution and makes results more consistent than with manual testing.

- Better Test Coverage: Automated testing can cover more scenarios and test cases than manual testing in the same amount of time, so that more of the software can be tested thoroughly.

- Cost Savings: Automated testing can reduce testing costs, especially for regression tests, because the time required to execute the tests is reduced.

- Faster Time-to-Market: Automated testing can help to speed up the development process by quickly identifying bugs and issues that need to be fixed.

While there are many benefits to automating high-level software testing, there are also some potential drawbacks to consider. Here are some reasons why you might not want to automate software testing:

- High Initial Investment: Automating software testing requires significant upfront investment in terms of time, resources, and expertise. Additionally, license fees or royalties may be incurred for the automation tools. This can be a barrier for smaller teams or organizations with limited resources.

- Limited Scope: Automated tests can only test what they are programmed to test, which may not cover all possible scenarios or edge cases. This means that manual testing may still be necessary to ensure complete coverage.

- Maintenance Costs: Automated tests require ongoing maintenance to ensure that they continue to function correctly as the software changes over time. This can be time-consuming and costly.

- False Positives: Automated tests can sometimes produce false positives, where a test result indicates a problem that doesn't actually exist. This can lead to wasted time and resources investigating non-existent issues.

- Lack of Human Insight: Automated testing can't replace the human insight and creativity that is sometimes needed to identify subtle or complex issues that may be missed by automated tests.

Overall, automating high-level software testing can provide significant benefits by improving efficiency, accuracy, test coverage, cost savings, and time-to-market.

Nevertheless, it is important to consider the potential drawbacks and weigh the costs and benefits before deciding whether or not to automate.

Considerations on Building up a Test Automation Suite

When writing a test automation suite, there are several important considerations to keep in mind to ensure that the suite is effective and efficient. Here are some key factors to consider and potential problems to avoid:

- Test coverage: Ensure that the test automation suite covers the critical automatable scenarios and functionality of the system. Not covering important use cases can lead to missed defects, while over-testing can lead to inefficient test runs.

- Test environment: Ensure that the test environment closely matches the production environment, including the hardware, software, and network configurations. Differences between the environments can lead to false test results or missed defects.

- Test maintenance: Ensure that the test automation suite is maintainable and scalable. Changes to the system or requirements should be reflected in the test automation suite, and outdated or obsolete tests should be removed.

- Test reporting: Ensure that the test automation suite provides clear and actionable reports, including test results, logs, and screenshots. Poor reporting can make it difficult to identify and diagnose defects.

- Test execution: Ensure that the test automation suite can be executed reliably and efficiently, with minimal manual intervention. Unreliable or inefficient test runs can result in wasted time and resources.

- Test framework: Ensure that the test automation suite is built on a robust and scalable test framework. An inadequate or poorly designed framework can lead to maintenance issues, unstable tests, and difficulty in scaling.
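
For the test environment point in the list above, one simple way to spot differences is to describe both environments as key-value settings and compare them before a test run. The following Python sketch assumes such descriptions are available; `diff_environments` and the setting names and values are hypothetical and chosen purely for illustration.

```python
from typing import Dict, Tuple

MISSING = "<missing>"


def diff_environments(
    test_env: Dict[str, str], prod_env: Dict[str, str]
) -> Dict[str, Tuple[str, str]]:
    """Return the settings that differ, as {setting: (test value, prod value)}."""
    differences = {}
    for key in sorted(set(test_env) | set(prod_env)):
        test_value = test_env.get(key, MISSING)
        prod_value = prod_env.get(key, MISSING)
        if test_value != prod_value:
            differences[key] = (test_value, prod_value)
    return differences


# Illustrative environment descriptions (not real configurations).
test_env = {"browser_version": "120", "database": "15", "locale": "en_US"}
prod_env = {"browser_version": "124", "database": "15", "locale": "en_US",
            "proxy": "enabled"}

for setting, (in_test, in_prod) in diff_environments(test_env, prod_env).items():
    print(f"{setting}: test={in_test} production={in_prod}")
```

Whether a reported difference matters is still a human decision; the sketch only makes the differences visible.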

Some potential problems to avoid when writing a test automation suite include:

- Focusing on quantity over quality: It's important to ensure that the tests in the suite are effective and efficient, rather than trying to cover as many scenarios as possible.

- Neglecting maintenance: Test automation suites require ongoing maintenance to remain effective, and neglecting maintenance can lead to outdated or obsolete tests.

- Inadequate test data: Test data must be accurate, relevant, and up to date to ensure effective testing.

- Over-reliance on automation: It's important to balance automated tests with manual testing, as some scenarios may be difficult or impractical to automate.

- Poorly designed test framework: A poorly designed test framework can lead to maintenance issues, unstable tests, and difficulty in scaling.

By considering these factors and avoiding potential problems, you can create an effective and efficient test automation suite that helps ensure the quality of your system.

Considerations on the Choice, Input, and Usage of Test Data

When automating tests, you need to bring in test data that covers various scenarios to ensure that the system behaves as expected under different conditions. Here are some options to bring in test data and considerations to keep in mind:

- Manual input: You can manually input test data into your automated test scripts, but this can be time-consuming and error-prone. It's essential to ensure that the data is entered correctly, or it can lead to false test results.

- CSV or Excel files: You can store test data in CSV or Excel files and import them into your automated test scripts. This approach can save time and reduce errors. However, it's important to make sure that the data is structured correctly and that the files are accessible and up to date.

- Database queries: You can query databases to retrieve test data and use it in your automated tests. This approach can be efficient, but it requires advanced knowledge of SQL and database management.

- APIs: You can use APIs to retrieve test data from external sources such as web services. This approach can be useful when testing integrations with other systems.
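
As an illustration of the CSV option above, the following Python sketch reads test data with the standard library's `csv.DictReader` and runs one comparison per row. The column names, the sample rows, and the function under test `order_total` are assumptions made for this example; the CSV text is embedded as a string only to keep the sketch self-contained, where a real suite would open a file.

```python
import csv
import io
from decimal import Decimal
from typing import List

# Illustrative test data; in practice this would live in a .csv file.
CSV_DATA = """\
quantity,unit_price,expected_total
1,10.00,10.00
3,2.50,7.50
0,99.99,0.00
"""


def order_total(quantity: int, unit_price: Decimal) -> Decimal:
    """Hypothetical function under test."""
    return quantity * unit_price


def run_data_driven(csv_text: str) -> List[str]:
    """Run one check per CSV row and return a message for every mismatch."""
    failures = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for line_number, row in enumerate(reader, start=2):  # line 1 is the header
        actual = order_total(int(row["quantity"]), Decimal(row["unit_price"]))
        expected = Decimal(row["expected_total"])
        if actual != expected:
            failures.append(
                f"line {line_number}: expected {expected}, got {actual}"
            )
    return failures


failures = run_data_driven(CSV_DATA)
print("all rows passed" if not failures else "\n".join(failures))
```

Reporting the CSV line number with each mismatch makes it easier to trace a failure back to the data row that caused it, which also helps when the data file itself is wrong.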

When bringing in test data, consider the following:

- Ensure that the test data is representative of real-world scenarios.

- Verify that the test data is correct and up to date.

- Consider the test data size and complexity.

- Plan for data maintenance, including updating the test data when necessary.

- Protect sensitive data in accordance with company policies and regulatory requirements.

- Consider the performance impact of large test data sets.

- Verify that the test data is accessible and can be imported into the automated tests.
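
For the point on protecting sensitive data, one common approach is to replace sensitive values with pseudonyms before the data is used in tests. The Python sketch below is an assumption-laden illustration, not a compliance recipe: the field names in `SENSITIVE_FIELDS`, the function `pseudonymize`, and the salt value are hypothetical, and whether this kind of masking is sufficient depends on your company policies and regulatory requirements.

```python
import hashlib
from typing import Dict

# Assumption: these are the fields your policy classifies as sensitive.
SENSITIVE_FIELDS = {"patient_name", "email"}


def pseudonymize(record: Dict[str, str], salt: str) -> Dict[str, str]:
    """Replace sensitive values with stable pseudonyms; keep other fields."""
    masked = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
            masked[field] = f"{field}_{digest[:8]}"
        else:
            masked[field] = value
    return masked


# Illustrative record, not real data.
record = {"patient_name": "Jane Doe", "email": "jane@example.com",
          "batch_id": "B-00042"}
masked = pseudonymize(record, salt="test-suite-salt")
print(masked)
```

Because the same input and salt always produce the same pseudonym, records that referred to the same person before masking still match each other afterwards, which keeps relationships in the test data intact.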

By considering these factors, you can make sure that your automated tests use relevant and accurate test data, which increases the likelihood of detecting defects and helps ensure the system's quality.