Flaky UI Automated Tests

Written by Virginie Rochat

What is a flaky test?

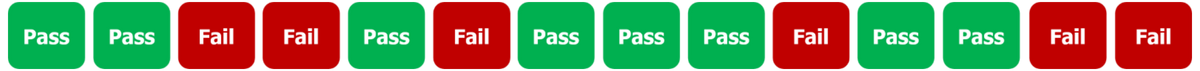

A flaky test is an automated test that produces inconsistent results even though nothing has changed in the test script. Such tests are unpredictable: they pass or fail intermittently without any change to the code or to the application under test, producing false failures and, potentially, false passes.

In short, flaky tests are tests that sometimes pass and sometimes fail without any change to the production or test code. A 2024 survey conducted by Dorothy Graham (Graham 2024) found that flakiness is still among the top 4 problems in test automation. Generally speaking, UI automation testing is seen as:

- Flaky

- Fragile

- Brittle

- Unreliable

- Having unexplained non-deterministic failures

Test Automation has its own Paradox: when running automated tests, we are actually testing two things:

- The System Under Test (SUT)

- The Test Automation Code

Knowing this, how can one establish how reliable the test automation solution is? Simple: by running it against the SUT many times.
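As a minimal sketch of what "running it many times" could look like, the Python snippet below repeats a test function and reports the fraction of runs that passed. The names `measure_pass_rate` and `example_test` are hypothetical and not tied to any particular test framework; `example_test` is only a stand-in that fails on every 20th call so that the result is reproducible.

```python
from itertools import count


def measure_pass_rate(test_fn, runs=100):
    """Run `test_fn` repeatedly and return the fraction of runs that passed."""
    passed = 0
    for _ in range(runs):
        try:
            test_fn()
            passed += 1
        except Exception:
            # Any exception counts as a failed run; keep going.
            pass
    return passed / runs


_calls = count(1)


def example_test():
    # Hypothetical stand-in for a UI test: fails on every 20th call.
    assert next(_calls) % 20 != 0


rate = measure_pass_rate(example_test, runs=200)
print(f"pass rate: {rate:.1%}")  # pass rate: 95.0%
```

With a real test, a pass rate below 100% against an unchanged SUT is a signal to investigate rather than a number to accept.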

Flaky tests are better than nothing… right?

The general initial assumption is that flaky tests are better than no tests at all. If a test can pass 99% of the time, then it can prevent bad commits most of the time. This assumption sounds reasonable, until we do the math:

Scenario 1

- Test suite with 300 tests

- Each test has a failure rate of 0.5% → pass rate = 99.5%

- What is the global impact of that across the entire suite of tests?

Test Suite Pass Rate = $(99.5\%)^{300} \approx 22.2\%$

Scenario 2

- Still a Test Suite with 300 tests

- Each test has a failure rate of 0.2% → pass rate = 99.8%

- What is the global impact of that across the entire suite of tests?

Test Suite Pass Rate = $(99.8\%)^{300} \approx 54.8\%$

In the first scenario, the Test Suite will fail for no apparent reason more than ¾ of the time (about 77.8% of runs)! This is obviously less than ideal, and it is important to keep in mind that the more tests there are in the Test Suite, the lower the global pass rate will be…

The second scenario shows that lowering the per-test failure rate by just 0.3 percentage points (from 0.5% to 0.2%) more than doubles the pass rate of the Test Suite, from 22.2% to 54.8%!
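The arithmetic in both scenarios can be reproduced with a few lines of Python. This is only a sketch: the function name `suite_pass_rate` is hypothetical, and the calculation assumes that each test fails independently of the others, which is what multiplying the individual pass rates implies.

```python
def suite_pass_rate(per_test_pass_rate, test_count):
    """Probability that every test passes, assuming independent failures."""
    return per_test_pass_rate ** test_count


for failure_rate in (0.005, 0.002):
    rate = suite_pass_rate(1 - failure_rate, 300)
    print(f"{failure_rate:.1%} failure rate -> suite passes {rate:.1%} of runs")
# 0.5% failure rate -> suite passes 22.2% of runs
# 0.2% failure rate -> suite passes 54.8% of runs
```

Changing the `300` to the size of your own suite gives a quick feel for how fast small per-test failure rates compound.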

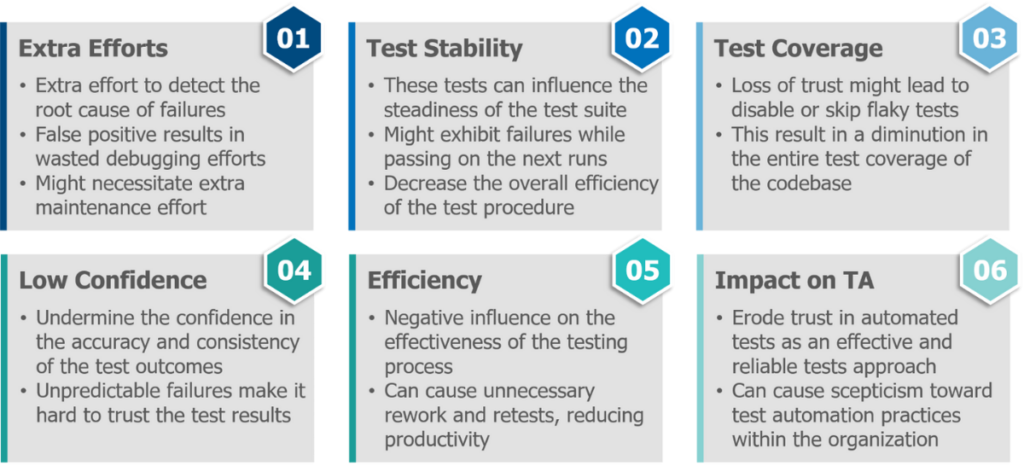

The question now is whether the initial assumption, that a flaky test is better than no test, is correct (spoiler alert: it’s not!). To answer it, we need to look at the impact of flaky tests.

The impact is indeed massive and will ultimately (but inevitably) lead to the final conclusion that automated UI testing is worthless.

So, to the question asked at the start of this section, we can safely say that a flaky test is in fact WORSE than no test!

Is the flakiness really random? Or is there a cause?…

In almost all cases, what people consider as a “flaky test” has a concrete root cause: investigate it, find it and solve it!

The most common causes for what is considered to be flakiness are the following:

- Test design: one of the most common reasons for flakiness is actually poor test writing/design! Flaky tests can occur due to issues related to incorrect locator strategies (such as identifying a button by its inner text when the latter is dynamic) or missing assertions

- Timing & synchronization issues: flakiness is also very common when there is a need for proper synchronization between test phases (webpage is not fully loaded yet when the test is executed), or when timing dependencies exist (test fails because the SUT takes longer than expected to load)

- Environmental factors: there are many environmental factors that can lead a test to fail when it shouldn’t, such as network connectivity issues, fluctuations in system resources (memory, CPU), dependencies on APIs or third-party services, etc.

- Unstable test environment: if the test environment is unstable, then so are the tests… An unstable test environment can mean an inconsistent environment (such as differing browser versions or operating systems), an inadequate configuration (like incorrect path settings or missing dependencies/libraries), an unreliable test infrastructure, or a test setup that varies between runs

- Async waits: an inappropriate handling of asynchronous waits and operations – such as network requests or UI updates – might introduce race conditions and timing problems

- Concurrency: incorrect assumptions about the order in which different threads perform their operations can also cause inconsistent results
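To make the concurrency cause above more concrete, here is a small Python sketch. The function `collect_results` and its workers are hypothetical stand-ins for any code that does work on several threads. The commented-out assertion assumes the threads finish in the order they were started, which the scheduler does not guarantee, so it could pass on most runs and fail on some. The second assertion checks only what was produced.

```python
import threading


def collect_results(worker_count):
    """Start `worker_count` threads that each append their id to a shared list."""
    results = []
    lock = threading.Lock()

    def worker(worker_id):
        with lock:  # protects the list, but does NOT fix the order of appends
            results.append(worker_id)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # wait for every worker before inspecting the results
    return results


results = collect_results(8)

# Fragile: assumes the threads finish in the order they were started.
# assert results == list(range(8))

# More robust: checks what was produced, not the order it arrived in.
assert sorted(results) == list(range(8))
```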

Preventing flakiness in tests

If there is a cause for a test to fail when it’s not supposed to, then the so-called flakiness can be prevented. Here are some tips and things to pay attention to in order to limit flakiness from the start, as well as ways to handle it when it appears:

- Fine-tune the order of operations to decrease concurrency and reduce dependencies (atomic tests). By carefully ordering test actions, one can reduce the odds of race conditions or conflicts that cause flakiness

- Build a stable and consistent test environment by ensuring that external factors (like system resources or network connectivity) are controlled, dependencies are managed, and the accessibility of essential resources for test implementation is confirmed

- Take time to think about the testing strategy prior to the implementation because, as previously mentioned, poorly written tests, a bad testing strategy and poor test design are major factors in test flakiness. It is crucial to start with a deep understanding of the SUT, and then to introduce naming conventions as well as best practices for writing tests

- Foster a feedback culture: implement continuous monitoring of test outputs and gather feedback from the QA team. Encourage testers to report and address any potential flakiness they encounter, and regularly review these reports and take proactive measures to resolve them

- Use suitable synchronization techniques in the test code or within the testing tool to ensure that the test steps stay in sync with the SUT. Use explicit waits, wait conditions, or synchronization methods

- Optimize the testing timing by scheduling the runs while considering factors such as network congestion, system load and so on that might influence test reliability

- Institute proper test data management practices to retain reliability and consistency, and avoid using mutable or shared test data that can lead to flakiness

- Isolate the tests by reducing dependencies, which lessens the chance of interference between tests
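As an illustration of the explicit-wait tip above, the following Python sketch polls a condition until it holds or a timeout expires, instead of sleeping for a fixed time. The helper `wait_until` and the `FakePage` class are hypothetical and written only for this example. Most UI automation tools ship their own explicit-wait mechanism, which should be preferred when available.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.05):
    """Poll `condition` until it returns a truthy value or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


class FakePage:
    """Hypothetical stand-in for a page whose content appears after a delay."""

    def __init__(self, ready_after):
        self._ready_at = time.monotonic() + ready_after

    def is_loaded(self):
        return time.monotonic() >= self._ready_at


page = FakePage(ready_after=0.2)
wait_until(page.is_loaded, timeout=2.0)  # returns as soon as the page is ready
assert page.is_loaded()
```

Compared with a fixed sleep, this approach waits only as long as needed when the SUT is fast, and fails with a clear timeout error when the SUT is slower than the agreed limit.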

Key takeaways

- Do NOT fall down the rabbit hole of being accustomed to UI tests failing for no apparent reason!

- Learn from failure: engineering is a hypothesis; a failure is a starting point for a better hypothesis

- Don't hide failure, rather study it and improve

- In almost all cases, the so-called flakiness has a concrete root cause: investigate it, find it and solve it!

- If you cannot find the root cause of failure, your best option is to collect more evidence of the defect

- Flaky tests are a chance to better understand the SUT and your test framework/tool!

References

Graham, Dorothy. 2024. “Test Automation Community Survey.” AutomationSTAR. https://automation.eurostarsoftwaretesting.com/wp-content/uploads/2024/10/AS-Test-Automation-Community-Survey-Results-Rev02.pdf?utm_source=testguild&utm_campaign=promo&utm_medium=post.